
I am a PhD student at the Language Technologies Institute at Carnegie Mellon University, working with Prof. Eric P. Xing. I also work closely with Prof. Zhiting Hu. My research centers on world models and agent models, including topics such as multimodality, reasoning and planning, representation, self-supervised learning, and reinforcement learning. World models are generative models that simulate future states of the world given proposed actions, while agent models are reasoning systems that select actions to achieve specific goals. I'm particularly interested in how the interplay between these models can help us move toward general-purpose intelligence. I completed my MS at CMU's Machine Learning Department. Before that, I worked as a data scientist in industry following an internship at Baidu Research, where I was advised by Prof. Hui Xiong. I earned my undergraduate degree from Columbia University, with a double major in Mathematics-Statistics and Computer Science.




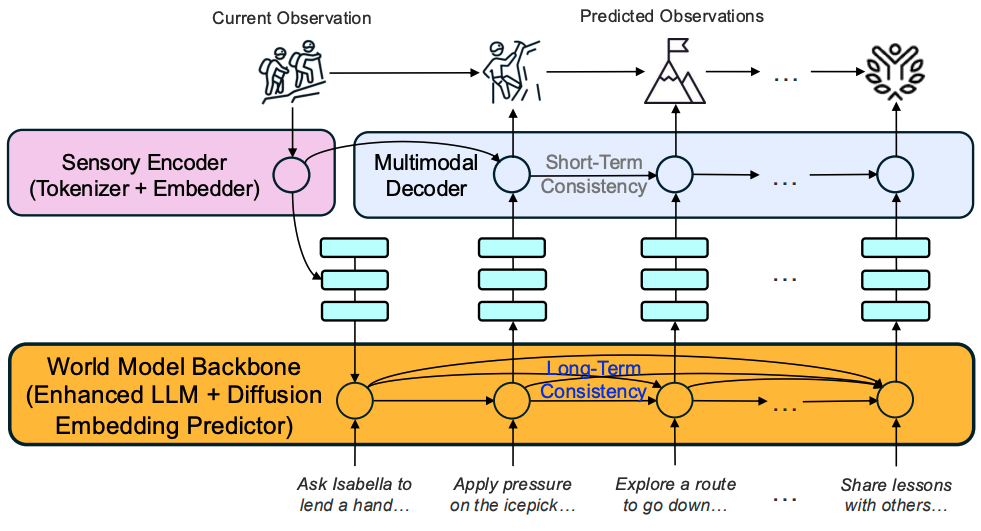

Eric Xing*, Mingkai Deng*, Jinyu Hou, Zhiting Hu
arXiv 2025
paper

This position paper critiques several schools of thought on world modeling and proposes a new architecture for a general-purpose world model, with an outlook toward a Physical, Agentic, and Nested (PAN) AGI system enabled by such a model.


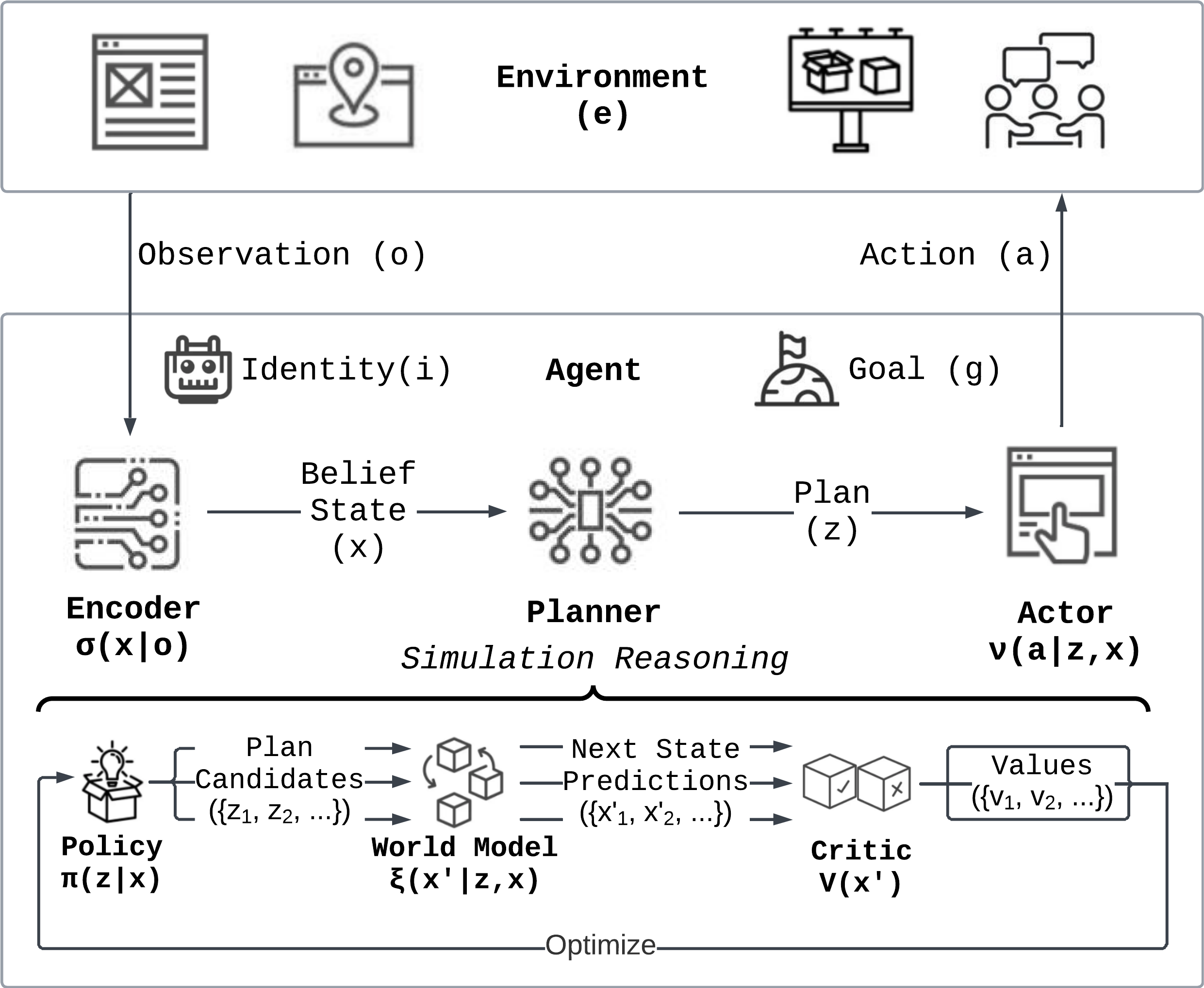

Mingkai Deng*, Jinyu Hou*, Zhiting Hu, Graham Neubig, Hongxia Jin, Yilin Shen, Eric P. Xing
Berkeley LLM Agents Hackathon (Winner of Fundamental Track)
paper (coming soon) / blog post / code / demo

SimuRA is a general architecture for optimal goal-oriented agents based on simulation with an LLM-based world model. ReasonerAgent-Web, a web agent built on SimuRA, achieves up to 124% improvement on web browsing tasks.


Zhengzhong Liu*, Bowen Tan*, Hongyi Wang*, Willie Neiswanger, Tianhua Tao, Haonan Li, Fajri Koto, Yuqi Wang, Suqi Sun, Omkar Pangarkar, Richard Fan, Yi Gu, Victor Miller, Liqun Ma, Liping Tang, Nikhil Ranjan, Yonghao Zhuang, Guowei He, Renxi Wang, Mingkai Deng, Robin Algayres, Yuanzhi Li, Zhiqiang Shen, Preslav Nakov, Eric Xing
arXiv 2025
paper / code / data / base model / chat model

A 65-billion-parameter, 360-degree open-source LLM that surpasses LLaMA-65B and rivals LLaMA2-70B while requiring fewer FLOPs and training tokens.


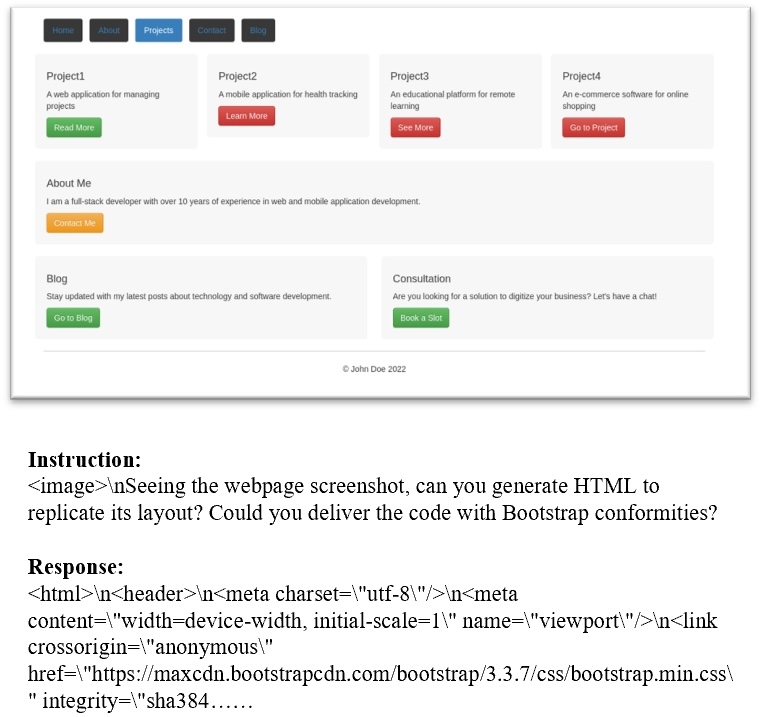

Sukmin Yun*, Haokun Lin*, Rusiru Thushara*, Mohammad Qazim Bhat*, Yongxin Wang*, Zutao Jiang, Mingkai Deng, Jinhong Wang, Tianhua Tao, Junbo Li, Haonan Li, Preslav Nakov, Timothy Baldwin, Zhengzhong Liu, Eric P. Xing, Xiaodan Liang, Zhiqiang Shen
NeurIPS 2024, Datasets and Benchmarks
website / paper / code / data

A large-scale webpage-to-code dataset that enhances the webpage understanding and HTML code translation abilities of MLLMs without compromising their general visual capabilities.


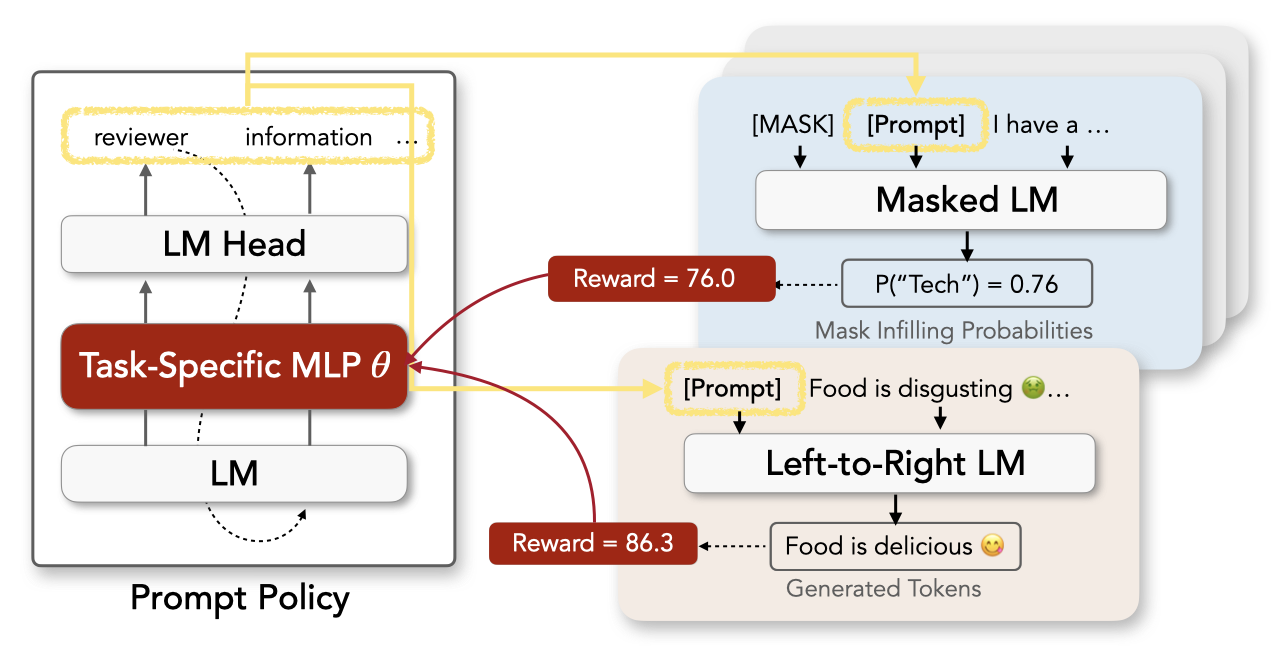

Mingkai Deng*, Jianyu Wang*, Cheng-Ping Hsieh*, Yihan Wang, Han Guo, Tianmin Shu, Meng Song, Eric P. Xing, Zhiting Hu
EMNLP 2022
paper / code

An efficient and flexible framework that uses RL to optimize discrete text prompts, enabling pre-trained LMs (e.g., BERT, GPT-2) to perform diverse NLP tasks. Experiments on few-shot classification and unsupervised text style transfer show superior performance over a wide range of existing methods.


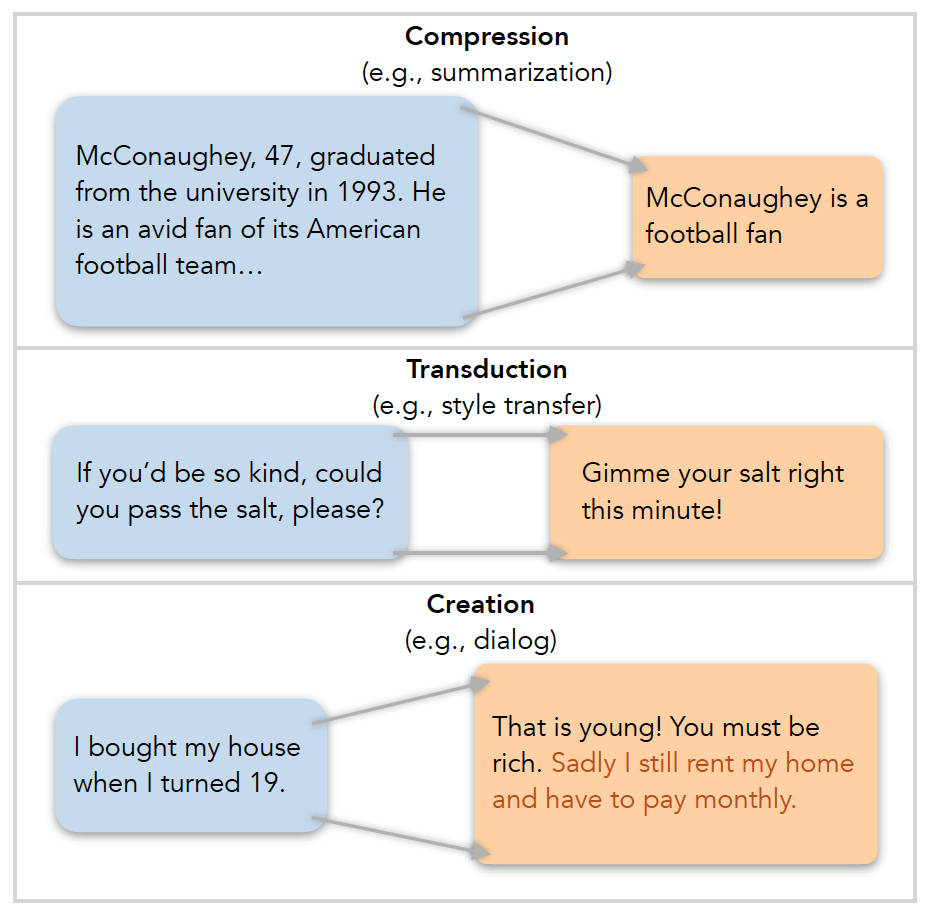

Mingkai Deng*, Bowen Tan*, Zhengzhong Liu, Eric P. Xing, Zhiting Hu
EMNLP 2021
paper / slides / blog / code / open-source library

A general framework that addresses the difficulty of evaluating natural language generation (NLG) through a single unified operation. Evaluation metrics inspired by the framework improve over state-of-the-art metrics on diverse NLG tasks. Our metrics are available as a library on PyPI and GitHub.


(Alphabetical Order) Brandon Chiou, Mason Choey, Mingkai Deng, Jinyu Hou, Jackie Wang, Ariel Wu, Frank Xu, Zhiting Hu, Hongxia Jin, Li Erran Li, Graham Neubig, Yilin Shen, Eric P. Xing
Maitrix.org
website / code / demo

An open-source web agent that operates in a Chromium-based browser and can perform a broad range of realistic tasks requiring complex, long-range, goal-achieving behavior, such as searching for flights, compiling online shopping options, and researching news coverage.


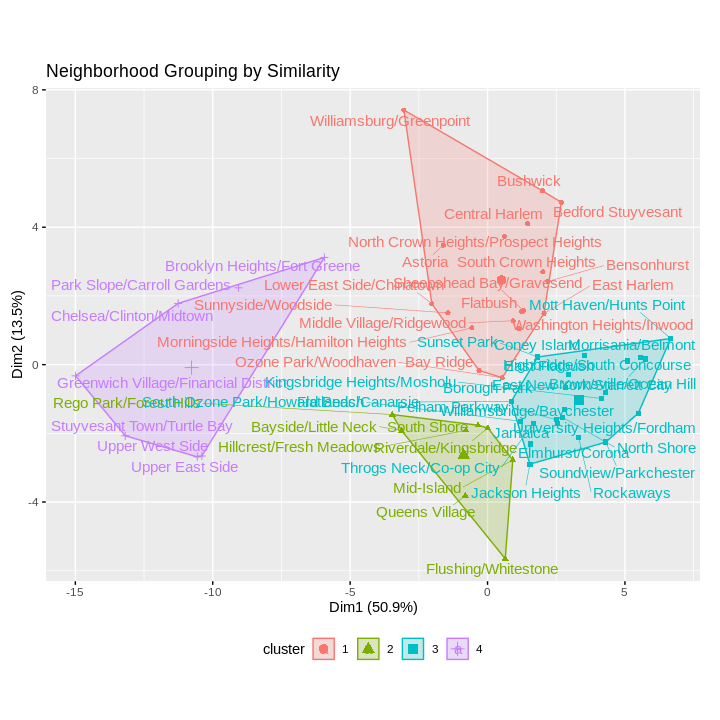

Mingkai Deng, Jerry Shi, Yvonne Zhou
ASA DataFest 2018 (Best Insights Award)
slides / code

A data-driven narrative of NYC gentrification patterns over time and their second-order impact on the city's inhabitants. We construct significant leading predictors using resources from NYC OpenData.


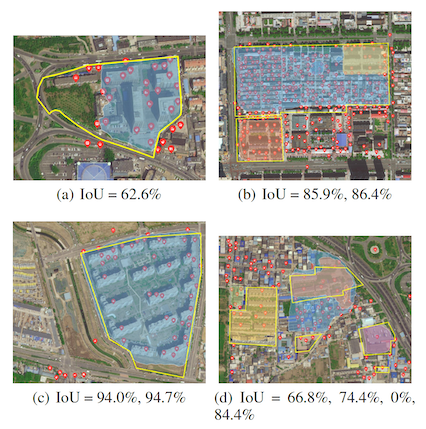

Mingkai Deng*, Guanglei Du*, Xinjiang Lu, Jingbo Zhou, Jing Sun, Yiming Zhang
Preprint 2019
paper

Combines structured point-of-interest (POI, e.g., parking lots and buildings) data with unstructured satellite image data to automatically identify and draw the boundaries of urban areas of interest (e.g., residential areas and campuses).


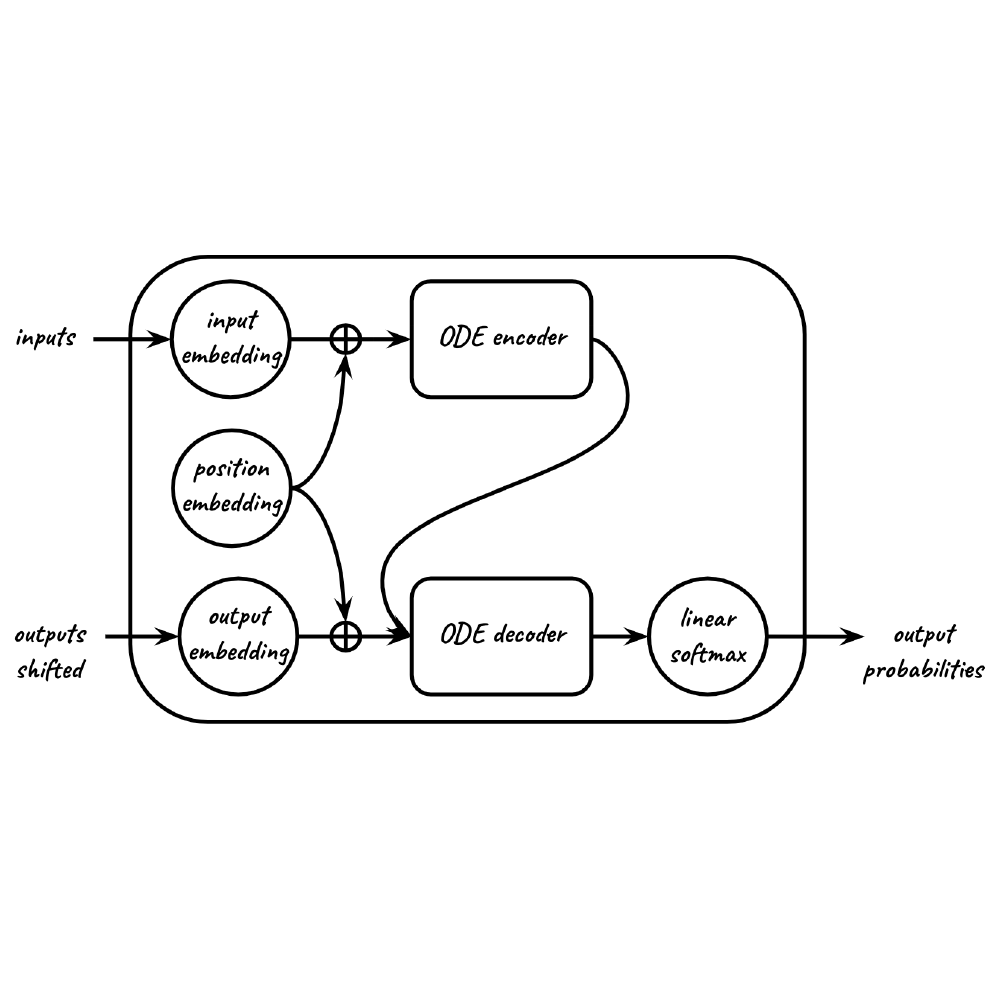

Mingkai Deng, Biqing Qiu, Yanda Chen, Iddo Drori
Preprint 2019
paper / code

Formulates the Transformer model as an ordinary differential equation (ODE) and performs the forward and backward passes using an ODE solver. The resulting continuous-depth model has fewer parameters, converges faster, and shows promising performance in empirical experiments.


The source code of this website is adapted from here.